Project Description

With the ultimate goal of developing responsible AI products and services, FIAAI is a two-stage AI impact assessment. It combines governmental frameworks with public participation in an impact assessment game to help companies work more iteratively towards the ethical implementation of emerging technologies.

Challenge

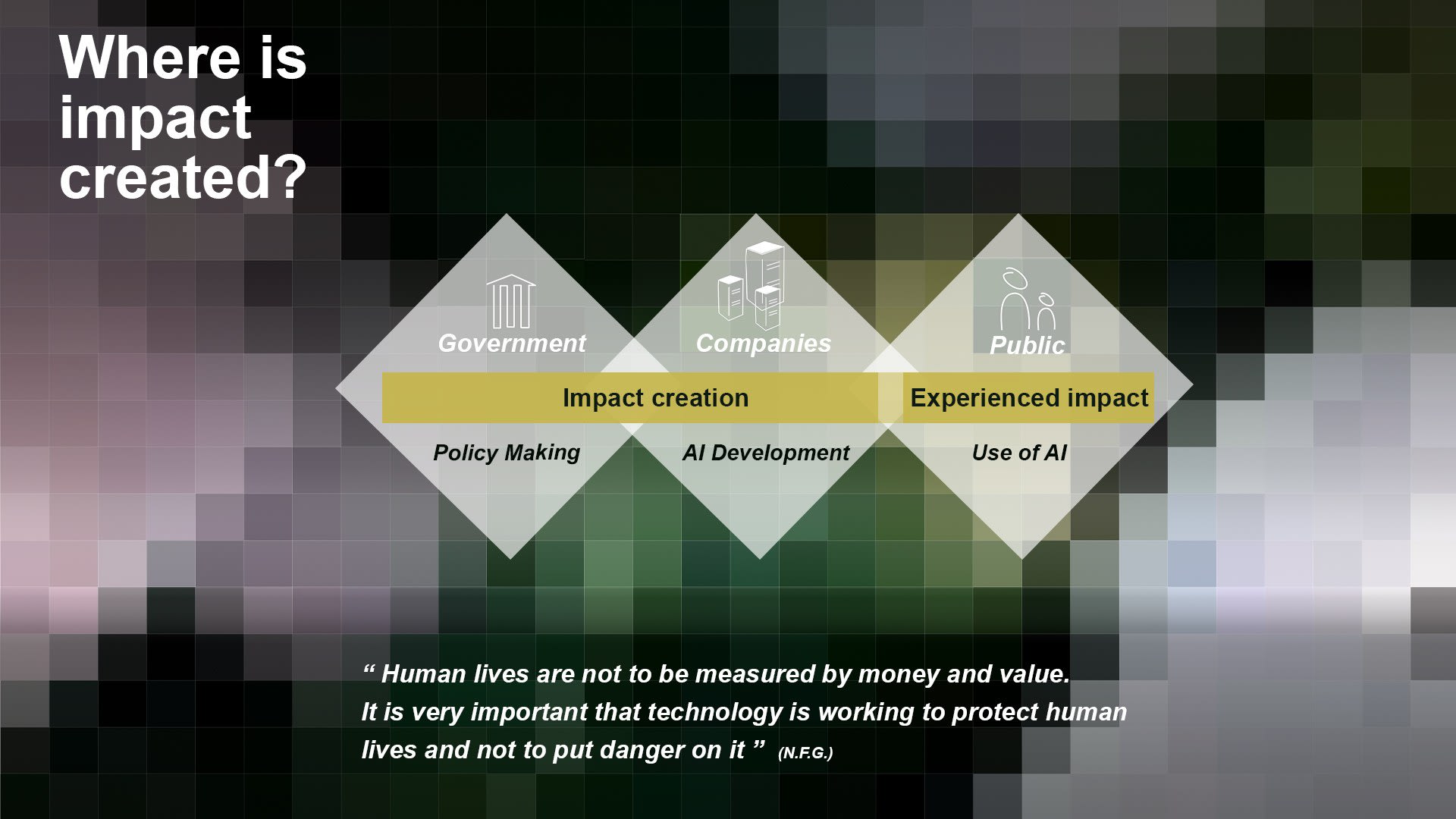

AI (Artificial Intelligence) is increasingly, if invisibly and silently, a part of our everyday lives. “Is there a need to explain AI? You probably don’t care as long as AI systems do a good job.” But what if they don’t? In that case, unintended negative impacts, whether short or long term, can have consequences of varying severity for people, their direct and indirect surroundings, the companies providing the systems, and the environment. Examples include a wrong diagnosis in healthcare or a biased AI that selects only certain kinds of people in an application process. So why explain impact only after something has gone wrong, if we could prevent it from happening in the first place? Where does impact come from? How is it assessed at the moment? Tracing these questions back led to tech companies and to the governmental frameworks that aim to guide product development.

Looking Beyond The Known

But being provided with governmental frameworks is just one side of the coin. Insights showed that the people in charge of implementing policy in products and services often feel insecure. They struggle with the usability of policy text. Timing, scope, structure, content, language and embodiment are reasons why policy might not be effectively translated into something actionable for companies. With regard to a more socio-technical impact assessment, interviewees found it hard to go beyond known risks, because a framework such as the DPIA (Data Protection Impact Assessment) has a strong focus on data and doesn’t provide any examples. The question therefore is: How can companies be enabled to do a socio-technical AI impact assessment informed by actionable policy?

FIAAI

FIAAI (Foresight Impact Assessment for AI) is a two-stage tool provided by regulators for companies. It consists of a platform for impact self-assessment and a game in which the public tests wider social implications, in order to help companies develop responsible AI products.

In the first phase, companies are given access to a government platform where they answer questions about their AI models. This way they can self-assess whether their AI product would be categorized as a high-risk or low-risk model, and policy recommendations can be made in a more targeted way.

Companies assessed as high risk would move on to phase two, where they would upload their models into a digital twin: a testing environment in the form of a game played by the public. People would select AI models as challenges to be gamed and give feedback on the impacts they experience.

Phase 1+2

The first phase would be a set of questions about the intended model development. These questions ask for general information about the model and its purpose, the countries in which it will be deployed, and the data and policy already in use in developing the model. The answers given would then be used to select appropriate policy recommendations and provide the companies with relevant information.
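To make the flow from answers to recommendations more concrete, here is a minimal sketch in Python. It is only an illustration: the field names, the risk rule, and the recommendation texts are all hypothetical placeholders, since the project description does not define the actual questions or criteria the platform would use.

```python
from dataclasses import dataclass, field

# Everything below is a hypothetical placeholder for illustration only;
# FIAAI does not specify its questions, risk criteria, or recommendations.

@dataclass
class Answers:
    purpose: str                      # what the model is intended to do
    countries: list[str]              # where it will be deployed
    uses_personal_data: bool          # assumed example question about data
    policies_in_use: list[str] = field(default_factory=list)

def assess_risk(answers: Answers) -> str:
    """Toy rule (assumption): personal data or multi-country deployment is high risk."""
    if answers.uses_personal_data or len(answers.countries) > 1:
        return "high"
    return "low"

def recommend(answers: Answers) -> list[str]:
    """Select recommendations based on the given answers."""
    recommendations = []
    if answers.uses_personal_data and "DPIA" not in answers.policies_in_use:
        recommendations.append("Carry out a DPIA")
    if assess_risk(answers) == "high":
        recommendations.append("Proceed to phase two (public game)")
    return recommendations

if __name__ == "__main__":
    example = Answers("patient triage", ["UK"], uses_personal_data=True)
    print(assess_risk(example), recommend(example))
```

In a real platform the rule set would come from the regulators' frameworks rather than from hard-coded conditions like these.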

The second phase gives people the chance to choose between creating scenarios, playing scenarios, and giving feedback on other assessments to double-check responsible gaming.

At the beginning, every challenge would explain the model's purpose and the data being used for its features. This would have a highly educational function for the people playing the game.

The gathered feedback would inform the companies' development in an iterative manner until the AI model is approved by the game.

Certified By Users

Every AI model that passes the FIAAI testing receives a seal and is listed, with its version, on the FIAAI website.

This listing would be publicly accessible online and serve as a seal of quality.

![[untitled]](https://res.cloudinary.com/rca2020/image/upload/f_auto,h_1076,w_1920,c_fill,g_auto,q_auto/v1/rca2021/60c4ae91a98c7847e5591638-405598?_a=AXAH4S10)

![[untitled]](https://res.cloudinary.com/rca2020/image/upload/f_auto,h_1079,w_1920,c_fill,g_auto,q_auto/v1/rca2021/60c4ae91a98c7847e5591638-529683?_a=AXAH4S10)

![[untitled]](https://res.cloudinary.com/rca2020/image/upload/f_auto,h_1084,w_1920,c_fill,g_auto,q_auto/v1/rca2021/60c4ae91a98c7847e5591638-222496?_a=AXAH4S10)

![[untitled]](https://res.cloudinary.com/rca2020/image/upload/f_auto,h_1084,w_1920,c_fill,g_auto,q_auto/v1/rca2021/60c4ae91a98c7847e5591638-67853?_a=AXAH4S10)

![[untitled]](https://res.cloudinary.com/rca2020/image/upload/f_auto,h_1076,w_1920,c_fill,g_auto,q_auto/v1/rca2021/60c5d6f7a98c7847e5da4fa8-404926?_a=AXAH4S10)

![[untitled]](https://res.cloudinary.com/rca2020/image/upload/f_auto,h_1080,w_1920,c_fill,g_auto,q_auto/v1/rca2021/60c5d7d1a98c7847e5de83c3-616194?_a=AXAH4S10)